In the last post we took our first step in the mathematical introduction to classical Mechanics. In this post we’ll introduce a few physical concepts and some more mathematical tools.

— 1. Velocity and Acceleration —

The first thing to notice is that in Physics velocity is a vector concept. That means that in order for us to specify the velocity of an entity we must not only indicate its magnitude but also its direction.

First off, we need to define a frame.

| Definition 1

A frame is a set of axes together with a special point, taken as the origin, that allows one to describe the motion of a particle. |

When we get to real Physics we’ll talk a little bit more about what a particle is in Physics, but for now we’ll just leave it to intuition and/or background knowledge.

If we trace the position of a particle for a given period of time what we get is a curve whose parameter is time; this curve is called the trajectory.

If we want to keep track of the variations of the position of a particle when it is describing a given trajectory we can do that using the concept of displacement.

| Definition 2

Displacement is the difference between the final and initial positions of a particle. It is represented by the symbol $\Delta \vec{r}$ and is calculated in the following way:

$$\Delta \vec{r} = \vec{r}_f - \vec{r}_i$$

|

Now we all know that when a body describes a trajectory some parts of it may take a longer time and others a shorter time. To rigorously describe the motion of a particle we have to take into account how quickly its position changes, and we do this by introducing the concept of velocity.

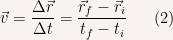

| Definition 3

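The average velocity of a particle is the rate of change in position of a particle for a given time interval:

$$\vec{v}_{\mathrm{avg}} = \frac{\vec{r}_f - \vec{r}_i}{t_f - t_i} = \frac{\Delta \vec{r}}{\Delta t}$$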

|

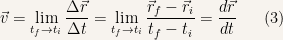

| Definition 4

Sometimes we aren’t interested in the average velocity and we want to know the velocity of a particle at a given instant. Intuitively we know that if we measure the average velocity in a sufficiently small interval we won’t be making a very big error in determining the value of the velocity.

If we let the time interval go to zero what we have is the velocity of the particle for the instant considered:

$$\vec{v} = \lim_{\Delta t \to 0} \frac{\Delta \vec{r}}{\Delta t}$$

We can rest assured that such a limit always exists since we are dealing with real bodies and their movements are always smooth.

|
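The limiting process in Definition 4 can be illustrated numerically. The following is a minimal sketch, assuming a hypothetical one-dimensional trajectory $x(t) = \sin t$ (chosen only for illustration, it does not come from the text): the average velocity over $[t, t + \Delta t]$ approaches the exact derivative $\cos t$ as $\Delta t$ shrinks.

```python
import math

def position(t):
    """Hypothetical 1D trajectory x(t) = sin(t), chosen only for illustration."""
    return math.sin(t)

def average_velocity(t, dt):
    """Average velocity over the time interval [t, t + dt]."""
    return (position(t + dt) - position(t)) / dt

t0 = 1.0
exact = math.cos(t0)  # analytic derivative of sin(t) at t0

errors = []
for dt in (1.0, 0.1, 0.01, 0.001):
    v_avg = average_velocity(t0, dt)
    errors.append(abs(v_avg - exact))
    print(f"dt = {dt:<6} v_avg = {v_avg:.6f}  error = {errors[-1]:.2e}")
```

The printed error shrinks roughly in proportion to the interval, which is the numerical counterpart of taking the limit.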

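Another way to mathematically define the average velocity and velocity is to make a change of variable and define $t_2 = t_1 + \Delta t$ and calculate $\Delta \vec{r} = \vec{r}(t_1 + \Delta t) - \vec{r}(t_1)$, $\vec{v}_{\mathrm{avg}} = \dfrac{\vec{r}(t_1 + \Delta t) - \vec{r}(t_1)}{\Delta t}$ and $\vec{v} = \lim_{\Delta t \to 0} \dfrac{\vec{r}(t_1 + \Delta t) - \vec{r}(t_1)}{\Delta t} = \dfrac{d\vec{r}}{dt}$.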

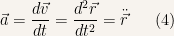

The other quantity of physical interest is average acceleration and it is the rate of change in velocity of a particle in a given time interval.

The definitions are analogous to the ones of average velocity and we just have to change $\vec{r}$ to $\vec{v}$.

We’ll just state the equation for acceleration here:

$$\vec{a} = \frac{d\vec{v}}{dt} = \frac{d^2\vec{r}}{dt^2} = \ddot{\vec{r}}$$

Where $\ddot{\vec{r}}$ is the so-called Newton notation and indicates that we are differentiating $\vec{r}$ two times with respect to $t$. One dot indicates that we are differentiating one time, and so on.

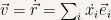

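If we use Cartesian coordinates we know that it is $\vec{r} = x\,\hat{e}_x + y\,\hat{e}_y + z\,\hat{e}_z$. Since our basis vectors are fixed it follows that $\vec{v} = \dot{x}\,\hat{e}_x + \dot{y}\,\hat{e}_y + \dot{z}\,\hat{e}_z$ and that $\vec{a} = \ddot{x}\,\hat{e}_x + \ddot{y}\,\hat{e}_y + \ddot{z}\,\hat{e}_z$.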
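As a quick worked example, consider a particle thrown horizontally from a height $h$ with speed $v_0$ under constant gravity $g$. The trajectory and the symbols $v_0$, $h$ and $g$ are assumptions chosen for illustration, not something taken from the text. Because the Cartesian basis vectors are fixed, only the components get differentiated:

```latex
\begin{aligned}
\vec{r}(t) &= v_0 t\,\hat{e}_x + \left(h - \tfrac{1}{2} g t^2\right)\hat{e}_z \\
\vec{v}(t) &= \dot{\vec{r}} = v_0\,\hat{e}_x - g t\,\hat{e}_z \\
\vec{a}(t) &= \ddot{\vec{r}} = -g\,\hat{e}_z
\end{aligned}
```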

— 2. Curvilinear Coordinates —

Sometimes using Cartesian coordinates to describe the motion of a particle is very awkward and one instead uses some other kind of curvilinear coordinates. The ones that are most used are polar coordinates, cylindrical coordinates and spherical coordinates.

— 2.1. Polar Coordinates —

If we are dealing with two dimensional problems that have circular symmetry then the best way to deal with them is to use polar coordinates.

In this system of coordinates instead of the $x$ and $y$ coordinates, one uses a radial coordinate $r$ that expresses the distance of a point from the origin and an angular coordinate $\theta$. This angular coordinate is measured from the $x$ axis to the position vector in the counterclockwise direction.

The ranges of values for these two variables are $0 \leq r < \infty$ and $0 \leq \theta < 2\pi$. Notice that at $r = 0$, $\theta$ isn’t defined and thus the origin can be thought to be a kind of singularity, but this is only so because we chose a particular set of coordinates and it isn’t intrinsic to the point itself.

As indicated in the picture the transformation of coordinates is:

$$x = r\cos\theta, \qquad y = r\sin\theta$$
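The transformation between polar and Cartesian coordinates, $x = r\cos\theta$ and $y = r\sin\theta$, can be sketched in a few lines of Python. The function names are hypothetical, and returning $\theta = 0$ at the origin is just a convention of this sketch, since the angle is undefined there.

```python
import math

def polar_to_cartesian(r, theta):
    """Convert polar (r, theta) to Cartesian (x, y)."""
    return r * math.cos(theta), r * math.sin(theta)

def cartesian_to_polar(x, y):
    """Convert Cartesian (x, y) to polar (r, theta), with theta in [0, 2*pi).

    At the origin the angle is undefined; atan2(0, 0) returns 0.0, which
    this sketch simply passes through as a convention.
    """
    r = math.hypot(x, y)
    theta = math.atan2(y, x) % (2 * math.pi)  # atan2 gives (-pi, pi]; shift to [0, 2*pi)
    return r, theta

x, y = polar_to_cartesian(2.0, 1.5 * math.pi)
print(x, y)                      # a point on the negative y axis
print(cartesian_to_polar(x, y))  # recovers r = 2 and theta = 3*pi/2 up to rounding
```

Using `atan2` rather than `atan(y / x)` avoids a division by zero on the $y$ axis and picks the correct quadrant automatically.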

— 2.2. Cylindrical Coordinates —

Sometimes when dealing with three dimensional problems we have to deal with circular symmetry around a certain axis. If this happens the most apt way to deal with the said problem is to use cylindrical coordinates.

Again we’ll use a mix of radial distances and angular distances. First we’ll only think about the plane formed by the $x$ and $y$ axes. In this plane we’ll project the position vector of a given point. The angle between the $x$ axis and this projection is $\varphi$. The other two coordinates are $r$, which is the length of this projection (that is, the distance between the point in question and the $z$ axis, not the origin), and $z$ (or $x_3$).

The ranges of values for these three coordinates are $0 \leq r < \infty$, $0 \leq \varphi < 2\pi$ and $-\infty < z < \infty$.

As indicated in the pictures the transformation of coordinates is:

$$x = r\cos\varphi, \qquad y = r\sin\varphi, \qquad z = z$$
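A minimal sketch of the cylindrical transformation follows, assuming the symbols $r$ (distance from the $z$ axis), $\varphi$ (angle in the $x$, $y$ plane) and $z$. The function names are hypothetical. The example also shows that $r$ is not the distance to the origin, which is $\sqrt{r^2 + z^2}$.

```python
import math

def cylindrical_to_cartesian(r, phi, z):
    """Convert cylindrical (r, phi, z) to Cartesian (x, y, z)."""
    return r * math.cos(phi), r * math.sin(phi), z

def cartesian_to_cylindrical(x, y, z):
    """Convert Cartesian (x, y, z) to cylindrical (r, phi, z), phi in [0, 2*pi)."""
    r = math.hypot(x, y)                    # distance from the z axis
    phi = math.atan2(y, x) % (2 * math.pi)  # angle of the projection on the x, y plane
    return r, phi, z

r, phi, z = cartesian_to_cylindrical(3.0, 4.0, 12.0)
distance_to_origin = math.sqrt(r**2 + z**2)
print(r, distance_to_origin)  # the two distances differ whenever z != 0
```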

— 2.3. Spherical Coordinates —

The last kind of curvilinear coordinates that we’ll look at are spherical coordinates. These coordinates are extremely useful when a problem has spherical symmetry.

This time we’ll have one radial coordinate and two angular coordinates. Like we did for cylindrical coordinates, first we’ll project the position vector onto the $x$, $y$ plane and define $\varphi$ to be the angle between the $x$ axis and the projection of the position vector. $r$ is the distance between the origin and the point in question. The second angular coordinate is $\theta$ and it is measured between the $z$ axis and the position vector.

As can be seen from the picture the change of variables from spherical coordinates to Cartesian coordinates is:

$$x = r\sin\theta\cos\varphi, \qquad y = r\sin\theta\sin\varphi, \qquad z = r\cos\theta$$

The range of each coordinate is defined to be $0 \leq r < \infty$, $0 \leq \theta \leq \pi$ and $0 \leq \varphi < 2\pi$.
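The spherical change of variables can be sketched the same way. This is a minimal sketch that assumes the convention in which $\theta$ is measured from the $z$ axis and $\varphi$ is the angle in the $x$, $y$ plane. The function names are hypothetical, and the angles returned at $r = 0$ are an arbitrary convention because they are undefined there.

```python
import math

def spherical_to_cartesian(r, theta, phi):
    """Convert spherical (r, theta, phi) to Cartesian (x, y, z).

    theta is the angle from the z axis, phi the angle in the x, y plane.
    """
    x = r * math.sin(theta) * math.cos(phi)
    y = r * math.sin(theta) * math.sin(phi)
    z = r * math.cos(theta)
    return x, y, z

def cartesian_to_spherical(x, y, z):
    """Convert Cartesian (x, y, z) to spherical (r, theta, phi)."""
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        return 0.0, 0.0, 0.0  # angles are undefined at the origin; 0 is a convention
    cos_theta = max(-1.0, min(1.0, z / r))  # clamp to guard against rounding
    theta = math.acos(cos_theta)            # in [0, pi]
    phi = math.atan2(y, x) % (2 * math.pi)  # in [0, 2*pi)
    return r, theta, phi

point = spherical_to_cartesian(2.0, math.pi / 3, 5 * math.pi / 4)
print(point)
print(cartesian_to_spherical(*point))  # round trip back to the input, up to rounding
```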

— 3. Velocity and Acceleration in Curvilinear Coordinates —

Having defined the previous three systems of coordinates it is time for us to know how to write the velocity and acceleration of a particle in these systems of coordinates.

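As we already saw for Cartesian coordinates one has the following relationships:

$$\vec{v} = \dot{x}\,\hat{e}_x + \dot{y}\,\hat{e}_y + \dot{z}\,\hat{e}_z, \qquad \vec{a} = \ddot{x}\,\hat{e}_x + \ddot{y}\,\hat{e}_y + \ddot{z}\,\hat{e}_z$$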

Our task will be to see how these equations translate when we are dealing with polar, cylindrical and spherical coordinates.

— 3.1. Polar Coordinates —

As stated previously, when we are using curvilinear coordinates the basis vectors change from point to point. Hence one has to use the Leibniz (product) rule when calculating derivatives.

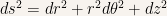

If we have two points that are infinitely close to each other, $P^{(1)}$ and $P^{(2)}$ (the reason for using superscripts will be evident right away), the infinitesimal variation in $\hat{e}_r$ is $d\hat{e}_r = \hat{e}_r^{(2)} - \hat{e}_r^{(1)}$ and for the angular infinitesimal variation it is $d\hat{e}_\theta = \hat{e}_\theta^{(2)} - \hat{e}_\theta^{(1)}$.

The previous relationships allow one to see that the infinitesimal variation for $\hat{e}_r$ is perpendicular to $\hat{e}_r$ and that the infinitesimal variation for $\hat{e}_\theta$ is perpendicular to $\hat{e}_\theta$. One can show this rigorously but I’ll only state the result that it is

$$d\hat{e}_r = d\theta\,\hat{e}_\theta, \qquad d\hat{e}_\theta = -d\theta\,\hat{e}_r$$

Hence

$$\vec{v} = \frac{d}{dt}\left(r\,\hat{e}_r\right) = \dot{r}\,\hat{e}_r + r\,\dot{\hat{e}}_r = \dot{r}\,\hat{e}_r + r\dot{\theta}\,\hat{e}_\theta$$

Thus the velocity can be thought of as having a radial component and an angular component. The radial component is $\dot{r}$ and is a measure of the rate of change in magnitude of the position vector. The angular component is $r\dot{\theta}$ and it measures the rate of change in direction of the position vector.

The $\dot{\theta}$ term is so important and appears so many times that it deserves its own special name. It is called the angular velocity, it is represented by the symbol $\omega$ and if one is interested in a vector representation it is $\vec{\omega} = \dot{\theta}\,\hat{e}_z$, where $\hat{e}_z$ is the unit vector perpendicular to the plane of motion.

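For the acceleration it is

$$\vec{a} = \frac{d}{dt}\left(\dot{r}\,\hat{e}_r + r\dot{\theta}\,\hat{e}_\theta\right) = \left(\ddot{r} - r\dot{\theta}^2\right)\hat{e}_r + \left(r\ddot{\theta} + 2\dot{r}\dot{\theta}\right)\hat{e}_\theta$$

Hence the radial component of the acceleration is $\ddot{r} - r\dot{\theta}^2$ and the angular component is $r\ddot{\theta} + 2\dot{r}\dot{\theta}$.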
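The polar expressions for velocity ($\dot{r}$, $r\dot{\theta}$) and acceleration ($\ddot{r} - r\dot{\theta}^2$, $r\ddot{\theta} + 2\dot{r}\dot{\theta}$) can be checked numerically. The sketch below assumes a hypothetical spiral trajectory $r(t) = 1 + t$, $\theta(t) = t^2$ (not from the text), rotates the polar components into Cartesian ones, and compares them with finite differences of $x(t)$ and $y(t)$.

```python
import math

# Hypothetical trajectory, chosen only for illustration.
def r(t):
    return 1.0 + t

def theta(t):
    return t * t

def cartesian(t):
    """Cartesian position of the particle at time t."""
    return r(t) * math.cos(theta(t)), r(t) * math.sin(theta(t))

def polar_velocity(t):
    """(radial, angular) components: (r_dot, r * theta_dot)."""
    r_dot, theta_dot = 1.0, 2.0 * t
    return r_dot, r(t) * theta_dot

def polar_acceleration(t):
    """(radial, angular) components of the acceleration."""
    r_dot, r_ddot = 1.0, 0.0
    theta_dot, theta_ddot = 2.0 * t, 2.0
    a_r = r_ddot - r(t) * theta_dot**2
    a_theta = r(t) * theta_ddot + 2.0 * r_dot * theta_dot
    return a_r, a_theta

def to_cartesian_components(radial, angular, t):
    """Rotate (e_r, e_theta) components into (e_x, e_y) components."""
    c, s = math.cos(theta(t)), math.sin(theta(t))
    return radial * c - angular * s, radial * s + angular * c

t, h = 0.7, 1e-4
(xm, ym), (x0, y0), (xp, yp) = cartesian(t - h), cartesian(t), cartesian(t + h)

# Central finite differences of the Cartesian coordinates.
v_numeric = ((xp - xm) / (2 * h), (yp - ym) / (2 * h))
a_numeric = ((xp - 2 * x0 + xm) / h**2, (yp - 2 * y0 + ym) / h**2)

v_formula = to_cartesian_components(*polar_velocity(t), t)
a_formula = to_cartesian_components(*polar_acceleration(t), t)

print("velocity    :", v_numeric, v_formula)
print("acceleration:", a_numeric, a_formula)
```

The two columns agree up to the finite-difference error, which is a useful sanity check on the signs of the $-r\dot{\theta}^2$ and $2\dot{r}\dot{\theta}$ terms.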

— 3.2. Cylindrical Coordinates —

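For cylindrical coordinates the infinitesimal line element is $d\vec{s} = dr\,\hat{e}_r + r\,d\varphi\,\hat{e}_\varphi + dz\,\hat{e}_z$. Hence one can show that it is

$$\vec{v} = \dot{r}\,\hat{e}_r + r\dot{\varphi}\,\hat{e}_\varphi + \dot{z}\,\hat{e}_z$$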

— 3.3. Spherical Coordinates —

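For spherical coordinates the infinitesimal line element is $d\vec{s} = dr\,\hat{e}_r + r\,d\theta\,\hat{e}_\theta + r\sin\theta\,d\varphi\,\hat{e}_\varphi$. And for the velocity it is

$$\vec{v} = \dot{r}\,\hat{e}_r + r\dot{\theta}\,\hat{e}_\theta + r\sin\theta\,\dot{\varphi}\,\hat{e}_\varphi$$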
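As a short worked step, assume the usual spherical velocity components $\dot{r}$, $r\dot{\theta}$ and $r\sin\theta\,\dot{\varphi}$, with $\theta$ measured from the $z$ axis and $\varphi$ in the $x$, $y$ plane. Since the three spherical basis vectors are mutually orthogonal unit vectors, the squared speed is just the sum of the squared components, and for motion confined to the plane $\theta = \pi/2$ it reduces to the polar result:

```latex
\begin{aligned}
v^2 &= \vec{v}\cdot\vec{v}
     = \dot{r}^2 + r^2\dot{\theta}^2 + r^2\sin^2\theta\,\dot{\varphi}^2 \\
\theta = \tfrac{\pi}{2},\ \dot{\theta} = 0
    &\;\Longrightarrow\; v^2 = \dot{r}^2 + r^2\dot{\varphi}^2
\end{aligned}
```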

— 4. Vector Calculus —

In this part of our post we’ll take a very brief look at some concepts of vector calculus that are useful to Physics.

— 4.1. Gradient, Divergence and Curl —

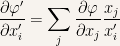

If one has $\psi$, a scalar function, continuous and differentiable, it is by definition $\psi'(x'_1, x'_2, x'_3) = \psi(x_1, x_2, x_3)$. From this it follows

$$\frac{\partial \psi'}{\partial x'_i} = \sum_j \frac{\partial \psi}{\partial x_j}\frac{\partial x_j}{\partial x'_i}$$

The inverse coordinate transformation is $x_j = \sum_k \lambda_{kj}\, x'_k$, where $\lambda_{ij}$ are the elements of the transformation matrix. If we calculate the derivative of $x_j$ with respect to $x'_i$ it is

$$\frac{\partial x_j}{\partial x'_i} = \sum_k \lambda_{kj}\,\delta_{ik} = \lambda_{ij}$$

Hence it is $\dfrac{\partial \psi'}{\partial x'_i} = \sum_j \lambda_{ij}\dfrac{\partial \psi}{\partial x_j}$. From what we’ve seen in the last post this means that the function $\dfrac{\partial \psi}{\partial x_j}$ is the j-th component of a vector.

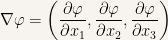

If we introduce the operator $\nabla$ (sometimes called del) whose definition is

$$\nabla = \sum_i \hat{e}_i \frac{\partial}{\partial x_i}$$

we can define the gradient of a scalar function, $\psi$, to be $\nabla \psi = \sum_i \hat{e}_i \dfrac{\partial \psi}{\partial x_i}$.
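To make the gradient concrete, here is a minimal numerical sketch that approximates each partial derivative with a central difference. The helper `gradient` and the test function `psi` are hypothetical names and choices made for illustration. For $\psi = x^2 y + z$ the analytic gradient is $(2xy,\ x^2,\ 1)$.

```python
def gradient(f, point, h=1e-6):
    """Approximate the gradient of a scalar function f at `point`.

    Each component is the central difference of f along one coordinate.
    """
    grad = []
    for i in range(len(point)):
        forward = list(point)
        backward = list(point)
        forward[i] += h
        backward[i] -= h
        grad.append((f(*forward) - f(*backward)) / (2 * h))
    return grad

def psi(x, y, z):
    """Hypothetical scalar field, chosen only for illustration."""
    return x**2 * y + z

g = gradient(psi, (1.0, 2.0, 3.0))
print(g)  # compare with the analytic gradient (2xy, x^2, 1) at (1, 2, 3)
```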

The divergence of a vector field, $\vec{A}$, is a scalar and is defined to be:

$$\nabla \cdot \vec{A} = \sum_i \frac{\partial A_i}{\partial x_i}$$

If the divergence of a vector field is positive at a given point that means that at that point a source of the field is present. If the divergence of the field is negative that means that a sink of the field is present.

Another vector entity that one can define with the del operator is the curl of a vector field. The curl is a vector entity too and its definition is:

$$\nabla \times \vec{A} = \sum_{i,j,k} \epsilon_{ijk}\,\frac{\partial A_k}{\partial x_j}\,\hat{e}_i$$
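Divergence and curl can be explored numerically with the same central-difference idea. This is a minimal sketch in which the helper names and the two test fields are hypothetical: a radial field $(x, y, z)$, which acts as a source everywhere, and a rotating field $(-y, x, 0)$, which has no sources but a non-zero curl along the $z$ axis.

```python
def partial(field, component, axis, point, h=1e-6):
    """Central-difference estimate of d(field[component]) / d(x[axis]) at `point`."""
    forward = list(point)
    backward = list(point)
    forward[axis] += h
    backward[axis] -= h
    return (field(*forward)[component] - field(*backward)[component]) / (2 * h)

def divergence(field, point):
    """Sum of dA_i/dx_i over the three Cartesian directions."""
    return sum(partial(field, i, i, point) for i in range(3))

def curl(field, point):
    """Cartesian components of the curl of `field` at `point`."""
    def d(component, axis):
        return partial(field, component, axis, point)
    return (d(2, 1) - d(1, 2),   # dAz/dy - dAy/dz
            d(0, 2) - d(2, 0),   # dAx/dz - dAz/dx
            d(1, 0) - d(0, 1))   # dAy/dx - dAx/dy

def radial_field(x, y, z):
    return (x, y, z)

def rotating_field(x, y, z):
    return (-y, x, 0.0)

p = (1.0, 2.0, 3.0)
print(divergence(radial_field, p), curl(radial_field, p))
print(divergence(rotating_field, p), curl(rotating_field, p))
```

The radial field has positive divergence and vanishing curl, while the rotating field shows the opposite behaviour.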

The last operator that we’ll define in this section is the Laplacian. This operator is the result of applying the del operator two times and it is

$$\nabla^2 = \nabla \cdot \nabla = \sum_i \frac{\partial^2}{\partial x_i^2}$$

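The integral of a vector field, $\vec{A}$, over a volume is also a vector and the result is given by the following expression:

$$\int_V \vec{A}\,dv = \left(\int_V A_1\,dv,\ \int_V A_2\,dv,\ \int_V A_3\,dv\right)$$

The integration of $\vec{A}$ over an area is what is called surface integration. What one integrates is the component of $\vec{A}$ normal to the surface $S$ in question. Thus what we need to define first is the normal of a surface at a given point. $\hat{n}$ will be this normal. We still have the ambiguity of having two possible directions for the normal at any given point, but this is taken care of by defining the normal to be on the outward direction of a closed surface. The surface integral is then

$$\int_S \vec{A} \cdot \hat{n}\,da$$

The line integral is taken along a path ($C$ for example) with end points $P_1$ and $P_2$. Again we have to consider a component of $\vec{A}$, but this time the quantity of interest is its component along the path:

$$\int_C \vec{A} \cdot d\vec{s}$$

where $d\vec{s}$ is an element of length along $C$ and is taken to be positive along the direction in which the path is defined.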
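A line integral $\int \vec{A} \cdot d\vec{s}$ can be approximated by chopping the path into short straight segments and summing the dot product of the field with each segment. The sketch below assumes a two-dimensional field $(-y, x)$ and the unit circle as the path; both are hypothetical choices for illustration, and the exact value for one counterclockwise turn is $2\pi$. Reversing the direction of travel flips the sign, in line with the sign convention for the element of length.

```python
import math

def line_integral(field, path, t_start, t_end, steps=10_000):
    """Approximate the integral of field . ds along a parametrised path.

    `path(t)` returns (x, y); the field is sampled at the midpoint of each
    straight segment joining consecutive points on the path.
    """
    total = 0.0
    dt = (t_end - t_start) / steps
    for k in range(steps):
        t_a = t_start + k * dt
        t_b = t_a + dt
        xa, ya = path(t_a)
        xb, yb = path(t_b)
        ax, ay = field(0.5 * (xa + xb), 0.5 * (ya + yb))
        total += ax * (xb - xa) + ay * (yb - ya)
    return total

def circle(t):
    """Unit circle traversed counterclockwise as t increases."""
    return math.cos(t), math.sin(t)

def rotating_field_2d(x, y):
    return -y, x

forward = line_integral(rotating_field_2d, circle, 0.0, 2 * math.pi)
backward = line_integral(rotating_field_2d, circle, 2 * math.pi, 0.0)
print(forward, backward)  # close to +2*pi and -2*pi respectively
```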