— 1. Stationary states —

In the previous posts we’ve normalized wave functions and calculated expectation values of position and momentum, but at no point have we asked a rather obvious question:

How does one calculate the wave function in the first place?

The answer to that question obviously is:

You have to solve the Schroedinger equation.

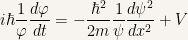

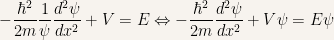

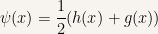

The Schroedinger equation is

$$i\hbar\frac{\partial \Psi}{\partial t} = -\frac{\hbar^2}{2m}\frac{\partial^2 \Psi}{\partial x^2} + V\Psi$$

which is a second-order partial differential equation. Partial differential equations are generally much harder to solve than ordinary differential equations.

The trick is to turn this partial differential equation into ordinary differential equations.

To do such a thing we’ll employ the separation of variables technique.

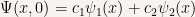

We’ll assume that $\Psi$ can be written as the product of two functions. One of the functions depends on the position $x$ alone, whereas the other depends solely on the time $t$:

$$\Psi(x,t) = \psi(x)\,\varphi(t)$$

This assumption might seem to restrict the class of solutions of the Schroedinger equation too severely, but in this case appearances are deceiving. As we’ll see later on, more general solutions of the Schroedinger equation can be constructed from separable solutions.

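Calculating the appropriate derivatives of $\Psi$ yields:

$$\frac{\partial \Psi}{\partial t} = \psi\frac{d\varphi}{dt}$$

and

$$\frac{\partial^2 \Psi}{\partial x^2} = \frac{d^2\psi}{dx^2}\varphi$$

Substituting the previous equations into the Schroedinger equation results in:

$$i\hbar\,\psi\frac{d\varphi}{dt} = -\frac{\hbar^2}{2m}\frac{d^2\psi}{dx^2}\varphi + V\psi\varphi$$

Dividing the previous equality by $\psi\varphi$ gives:

$$i\hbar\frac{1}{\varphi}\frac{d\varphi}{dt} = -\frac{\hbar^2}{2m}\frac{1}{\psi}\frac{d^2\psi}{dx^2} + V$$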

In the previous equality the left-hand side is a function of $t$ alone, while the right-hand side is a function of $x$ alone (remember that by hypothesis $V$ isn’t a function of $t$).

These two facts mean that the last equation requires a very fine balance. For instance, if one were to vary $x$ without varying $t$, then the right-hand side would change while the left-hand side would remain the same, spoiling our equality. Evidently such a thing can’t happen! The only way for the equality to hold is for both sides of the equation to be equal to the same constant. That way there’s no more funny business of changing one side while the other remains constant.

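For reasons that will become obvious in the course of this post we’ll denote this constant (the so-called separation constant) by $E$. The equality then splits into two ordinary differential equations. For the first equation we have

$$\frac{d\varphi}{dt} = -\frac{iE}{\hbar}\varphi$$

and for the second equation

$$-\frac{\hbar^2}{2m}\frac{d^2\psi}{dx^2} + V\psi = E\psi$$

The first equation of this group can be solved right away, and a solution is

$$\varphi(t) = e^{-iEt/\hbar}$$

(any multiplicative constant can be absorbed into $\psi$).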

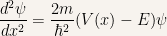

The second equation, the so-called time-independent Schroedinger equation, can only be solved once a potential $V(x)$ is specified.

As we can see, the method of separable solutions has lived up to its promise. With it we were able to produce two ordinary differential equations which can, in principle, be solved. In fact one of the equations is already solved.
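As a quick numerical sanity check of the equation we just solved, here is a minimal Python sketch. It assumes units with $\hbar = 1$ and an arbitrary example energy $E = 2.5$ (neither comes from the post), and uses a central finite difference to check that $\varphi(t) = e^{-iEt/\hbar}$ satisfies $d\varphi/dt = -iE\varphi/\hbar$ while keeping $|\varphi| = 1$.

```python
import cmath

HBAR = 1.0  # assumption: units in which hbar = 1


def phi(t: float, energy: float) -> complex:
    """Time factor of a separable solution: phi(t) = exp(-i E t / hbar)."""
    return cmath.exp(-1j * energy * t / HBAR)


def ode_residual(t: float, energy: float, dt: float = 1e-5) -> float:
    """Size of dphi/dt + (i E / hbar) phi, with dphi/dt from a central difference."""
    dphi_dt = (phi(t + dt, energy) - phi(t - dt, energy)) / (2 * dt)
    return abs(dphi_dt + 1j * energy / HBAR * phi(t, energy))


for t in (0.0, 0.7, 3.2):
    print(f"t = {t}: |phi| = {abs(phi(t, 2.5)):.12f}, residual = {ode_residual(t, 2.5):.2e}")
```

The residual is only as small as the finite-difference error allows. It is not exactly zero.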

At this point we’ll state a few characteristics of separable solutions in order to better understand their importance (one of these characteristics was already hinted at before):

- Stationary states

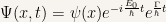

The wave function is

$$\Psi(x,t) = \psi(x)e^{-iEt/\hbar}$$

and it obviously depends on $t$. On the other hand, the probability density doesn’t depend on $t$:

$$|\Psi(x,t)|^2 = \Psi^*\Psi = \psi^*(x)e^{+iEt/\hbar}\,\psi(x)e^{-iEt/\hbar} = |\psi(x)|^2$$

This result relies on the implicit assumption that $E$ is real (in a later exercise we’ll see why $E$ has to be real).

If we calculated the expectation value of any dynamical variable, we would find that it is constant in time, because the time-dependent phases cancel in the same way:

$$\langle Q(x,p)\rangle = \int \psi^*\,Q\!\left(x,-i\hbar\frac{d}{dx}\right)\psi\,dx$$

In particular $\langle x\rangle$ is constant in time and as a consequence $\langle p\rangle = m\dfrac{d\langle x\rangle}{dt} = 0$.
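The following Python sketch illustrates numerically that the probability density of a stationary state does not change in time. It uses the infinite square well eigenfunctions $\psi_n(x) = \sqrt{2/a}\sin(n\pi x/a)$ with $E_n = n^2\pi^2\hbar^2/(2ma^2)$ purely as an assumed example potential (they are not derived in this post), in units with $\hbar = m = 1$. The width $a$ is called `WIDTH` in the code.

```python
import cmath
import math

# Assumptions for illustration (not from the post): hbar = m = 1 and an infinite
# square well of width WIDTH, whose eigenfunctions and energies are used below.
HBAR = 1.0
WIDTH = 1.0


def psi_n(n: int, x: float) -> float:
    """Well eigenfunction sqrt(2/WIDTH) sin(n pi x / WIDTH), valid for 0 <= x <= WIDTH."""
    return math.sqrt(2.0 / WIDTH) * math.sin(n * math.pi * x / WIDTH)


def energy_n(n: int) -> float:
    """E_n = (n pi hbar / WIDTH)^2 / (2 m) with m = 1."""
    return (n * math.pi * HBAR / WIDTH) ** 2 / 2.0


def stationary_density(n: int, x: float, t: float) -> float:
    """|Psi(x, t)|^2 for the separable solution Psi = psi_n(x) exp(-i E_n t / hbar)."""
    wave = psi_n(n, x) * cmath.exp(-1j * energy_n(n) * t / HBAR)
    return abs(wave) ** 2


for t in (0.0, 0.4, 7.5):
    print(f"t = {t}: |Psi(0.3, t)|^2 = {stationary_density(2, 0.3, t):.12f}")
print(f"|psi_2(0.3)|^2 = {psi_n(2, 0.3) ** 2:.12f}")
```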

- Definite total energy

As we saw in classical mechanics, the Hamiltonian of a particle is

$$H(x,p) = \frac{p^2}{2m} + V(x)$$

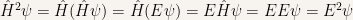

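Making the appropriate substitution $p \to -i\hbar\,\partial/\partial x$, we obtain the corresponding quantum mechanical operator (in quantum mechanics operators are denoted by a hat):

$$\hat{H} = -\frac{\hbar^2}{2m}\frac{\partial^2}{\partial x^2} + V(x)$$

Hence the time-independent Schroedinger equation can be written in the following form:

$$\hat{H}\psi = E\psi$$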

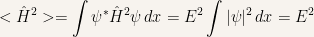

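The expectation value of the Hamiltonian is

$$\langle H\rangle = \int \psi^*\hat{H}\psi\,dx = E\int|\psi|^2\,dx = E$$

We also have

$$\hat{H}^2\psi = \hat{H}(\hat{H}\psi) = \hat{H}(E\psi) = E\hat{H}\psi = E^2\psi$$

Hence

$$\langle H^2\rangle = \int \psi^*\hat{H}^2\psi\,dx = E^2\int|\psi|^2\,dx = E^2$$

So the variance is

$$\sigma_H^2 = \langle H^2\rangle - \langle H\rangle^2 = E^2 - E^2 = 0$$

In conclusion, in a stationary state every energy measurement is certain to return the value $E$, since the spread of the energy distribution is $\sigma_H = 0$.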
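To see $\sigma_H = 0$ numerically, the sketch below discretizes $\hat H$ with a central finite difference. It then evaluates $\langle H\rangle$ and $\sigma_H^2 = \langle H^2\rangle - \langle H\rangle^2$ for a few stationary states. For illustration only, it assumes an infinite square well on $[0, 1]$ in units with $\hbar = m = 1$. The grid size `N` is an arbitrary choice.

```python
import math

# Assumptions for illustration (not from the post): hbar = m = 1 and an infinite square
# well on [0, 1], so inside the well H = -(1/2) d^2/dx^2 and psi_n(x) = sqrt(2) sin(n pi x).
N = 4000                      # number of grid intervals
DX = 1.0 / N
XS = [i * DX for i in range(N + 1)]


def psi_n(n, x):
    return math.sqrt(2.0) * math.sin(n * math.pi * x)


def apply_h(values):
    """Apply H = -(1/2) d^2/dx^2 with a central difference (psi vanishes at the walls)."""
    out = [0.0] * len(values)
    for i in range(1, len(values) - 1):
        out[i] = -0.5 * (values[i + 1] - 2.0 * values[i] + values[i - 1]) / DX**2
    return out


def integrate(values):
    """Trapezoidal rule on the uniform grid."""
    return DX * (sum(values) - 0.5 * (values[0] + values[-1]))


def energy_stats(n):
    """Return (<H>, sigma_H^2) for the n-th stationary state."""
    values = [psi_n(n, x) for x in XS]
    h_values = apply_h(values)
    mean_h = integrate([p * hp for p, hp in zip(values, h_values)])
    mean_h2 = integrate([hp * hp for hp in h_values])  # <H^2> = integral of |H psi|^2 (H Hermitian)
    return mean_h, mean_h2 - mean_h**2


for n in (1, 2, 3):
    mean_h, var_h = energy_stats(n)
    print(f"n = {n}: <H> = {mean_h:.5f} (exact {n**2 * math.pi**2 / 2:.5f}), sigma_H^2 = {var_h:.1e}")
```

The computed variance is zero up to rounding. $\langle H\rangle$ differs from $n^2\pi^2/2$ only by the small discretization error of the finite-difference Laplacian.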

- Linear combination

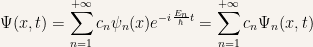

The general solution of the Schroedinger equation is a linear combination of separable solutions.

We’ll see in future examples and exercises that the time-independent Schroedinger equation has an infinite number of solutions $\psi_1(x), \psi_2(x), \psi_3(x), \dots$ Each of these different wave functions is associated with a different separation constant $E_1, E_2, E_3, \dots$ That is to say, for each allowed energy level there is a different wave function.

It so happens that the time-dependent Schroedinger equation is linear, so any linear combination of solutions is itself a solution. After finding the separable solutions, the task is to construct a more general solution of the form

$$\Psi(x,t) = \sum_{n=1}^{\infty} c_n\psi_n(x)e^{-iE_nt/\hbar}$$

The point is that every solution of the time-dependent Schroedinger equation can be written like this, with the initial conditions of the problem being studied fixing the values of the constants $c_n$.

I understand that all of this may be a little too abstract, so we’ll solve a few exercises to make it more palatable.

As an example we’ll calculate the time evolution of a particle that starts out in a linear combination of two stationary states:

$$\Psi(x,0) = c_1\psi_1(x) + c_2\psi_2(x)$$

For the sake of our discussion let’s take the constants $c_1$, $c_2$ and the states $\psi_1(x)$, $\psi_2(x)$ to be real.

Hence the time evolution of the particle is simply:

$$\Psi(x,t) = c_1\psi_1(x)e^{-iE_1t/\hbar} + c_2\psi_2(x)e^{-iE_2t/\hbar}$$

For the probability density we get

$$\begin{aligned} |\Psi(x,t)|^2 &= \left( c_1\psi_1(x)e^{i\frac{E_1}{\hbar}t}+c_2\psi_2(x)e^{i\frac{E_2}{\hbar}t} \right) \left( c_1\psi_1(x)e^{-i\frac{E_1}{\hbar}t}+c_2\psi_2(x)e^{-i\frac{E_2}{\hbar}t} \right)\\ &= c_1^2\psi_1^2+c_2^2\psi_2^2+2c_1c_2\psi_1\psi_2\cos\left[ \dfrac{E_2-E_1}{\hbar}t \right] \end{aligned}$$

As we can see, $\psi_1$ and $\psi_2$ are stationary states, so their probability densities are constant in time. Even so, the probability density of the combined wave function oscillates sinusoidally with angular frequency $\omega = \dfrac{E_2 - E_1}{\hbar}$.
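The result can be checked numerically. This Python sketch compares $|\Psi(x,t)|^2$ computed directly from the complex wave function with the closed-form expression above. It also confirms that the density repeats after one period $2\pi/\omega$. It assumes $\hbar = m = 1$, takes $\psi_1$ and $\psi_2$ to be the two lowest infinite-square-well states on $[0,1]$, and sets $c_1 = c_2 = 1/\sqrt{2}$. These are illustrative choices, not part of the original example.

```python
import cmath
import math

# Assumptions for illustration (not from the post): hbar = m = 1, psi_1 and psi_2 are the two
# lowest infinite-square-well states on [0, 1], and c1 = c2 = 1/sqrt(2) (real, as in the text).
HBAR = 1.0


def psi(n, x):
    return math.sqrt(2.0) * math.sin(n * math.pi * x)


def energy(n):
    return (n * math.pi * HBAR) ** 2 / 2.0


def density_direct(x, t, c1, c2):
    """|Psi(x, t)|^2 computed from the complex wave function itself."""
    wave = (c1 * psi(1, x) * cmath.exp(-1j * energy(1) * t / HBAR)
            + c2 * psi(2, x) * cmath.exp(-1j * energy(2) * t / HBAR))
    return abs(wave) ** 2


def density_formula(x, t, c1, c2):
    """c1^2 psi1^2 + c2^2 psi2^2 + 2 c1 c2 psi1 psi2 cos[(E2 - E1) t / hbar]."""
    p1, p2 = psi(1, x), psi(2, x)
    omega = (energy(2) - energy(1)) / HBAR
    return c1**2 * p1**2 + c2**2 * p2**2 + 2 * c1 * c2 * p1 * p2 * math.cos(omega * t)


c1 = c2 = 1 / math.sqrt(2)
omega = (energy(2) - energy(1)) / HBAR
period = 2 * math.pi / omega
for t in (0.0, 0.25 * period, 0.5 * period, period):
    print(f"t = {t:.4f}: |Psi(0.25, t)|^2 = {density_formula(0.25, t, c1, c2):.4f}")
```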

Prove that for normalizable solutions the separation constant $E$ must be real.

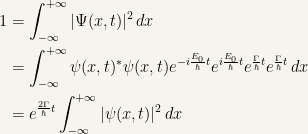

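Let us write $E$ as

$$E = E_0 + i\Gamma$$

with $E_0$ and $\Gamma$ real. Then the wave function is of the form

$$\Psi(x,t) = \psi(x)e^{-iEt/\hbar} = \psi(x)e^{-iE_0t/\hbar}e^{\Gamma t/\hbar}$$

and its normalization integral is

$$\int_{-\infty}^{+\infty}|\Psi(x,t)|^2\,dx = e^{2\Gamma t/\hbar}\int_{-\infty}^{+\infty}|\psi(x)|^2\,dx$$

The final expression has to be equal to $1$ for all values of $t$. The only way for that to happen is to set $\Gamma = 0$. Thus $E$ is real.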
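Here is a small Python sketch of the same argument. It evaluates the normalization integral of $\psi(x)e^{-iEt/\hbar}$ with the trapezoidal rule, once for a real $E$ and once for a complex $E = E_0 + i\Gamma$. The choice of $\psi$ (the normalized ground state of an infinite square well on $[0,1]$), $\hbar = 1$ and $\Gamma = 0.1$ are assumptions for illustration. With $\Gamma \neq 0$ the integral grows like $e^{2\Gamma t/\hbar}$ instead of staying equal to $1$.

```python
import cmath
import math

# Assumptions for illustration (not from the post): hbar = 1 and psi(x) is the normalized
# ground state sqrt(2) sin(pi x) of an infinite square well on [0, 1].
HBAR = 1.0
N = 2000            # number of grid intervals for the trapezoidal rule
DX = 1.0 / N


def norm_at(t, e0, gamma):
    """Integral of |psi(x) exp(-i E t / hbar)|^2 over the well, with E = e0 + i*gamma."""
    energy = complex(e0, gamma)
    phase = cmath.exp(-1j * energy * t / HBAR)
    values = [abs(math.sqrt(2.0) * math.sin(math.pi * i * DX) * phase) ** 2
              for i in range(N + 1)]
    return DX * (sum(values) - 0.5 * (values[0] + values[-1]))


E0 = math.pi**2 / 2
for gamma in (0.0, 0.1):
    print(f"Gamma = {gamma}:", [round(norm_at(t, E0, gamma), 6) for t in (0.0, 1.0, 2.0)])
```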

Show that the time-independent wave function $\psi(x)$ can always be taken to be a real-valued function.

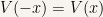

We know that $\psi$ is a solution of

$$-\frac{\hbar^2}{2m}\frac{d^2\psi}{dx^2} + V\psi = E\psi$$

Taking the complex conjugate of the previous equation (with $V$ and $E$ real) gives

$$-\frac{\hbar^2}{2m}\frac{d^2\psi^*}{dx^2} + V\psi^* = E\psi^*$$

Hence $\psi^*$ is also a solution of the time-independent Schroedinger equation, with the same energy $E$.

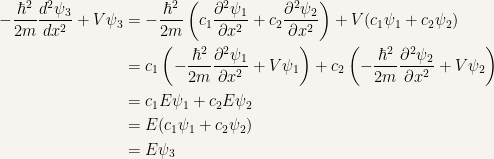

Our next result will be to show that if $\psi_1$ and $\psi_2$ are solutions of the time-independent Schroedinger equation with energy $E$, then their linear combination is also a solution of the time-independent Schroedinger equation with energy $E$.

Let’s take

$$\psi = c_1\psi_1 + c_2\psi_2$$

as the linear combination. Then

$$-\frac{\hbar^2}{2m}\frac{d^2\psi}{dx^2} + V\psi = c_1\left(-\frac{\hbar^2}{2m}\frac{d^2\psi_1}{dx^2} + V\psi_1\right) + c_2\left(-\frac{\hbar^2}{2m}\frac{d^2\psi_2}{dx^2} + V\psi_2\right) = c_1E\psi_1 + c_2E\psi_2 = E\psi$$

With this result it is obvious that $\psi_r \equiv \psi + \psi^*$ and $\psi_i \equiv i(\psi - \psi^*)$ are solutions to the time-independent Schroedinger equation. Apart from being solutions, these functions are also evidently real by construction. Since they have the same value of $E$ as $\psi$, we can use either one of them as a solution to the time-independent Schroedinger equation. Moreover, $\psi = \frac{1}{2}(\psi_r - i\psi_i)$, so any solution can be written as a linear combination of real solutions.
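A minimal Python sketch of this construction follows. It assumes, for illustration only, a free particle ($V = 0$) in units with $\hbar = m = 1$, with the complex solution $\psi(x) = e^{ikx}$ and $E = k^2/2$. It builds $\psi + \psi^*$ and $i(\psi - \psi^*)$ and checks, via a finite-difference second derivative, that both are real and still satisfy the time-independent Schroedinger equation. It then recovers $\psi$ from the two real functions.

```python
import cmath

# Assumptions for illustration (not from the post): hbar = m = 1, V(x) = 0 (free particle),
# and E = K^2 / 2 for the complex solution psi(x) = exp(i K x).
K = 3.0
ENERGY = K**2 / 2


def psi(x):
    """A complex-valued solution of -(1/2) psi'' + V psi = E psi with V = 0."""
    return cmath.exp(1j * K * x)


def psi_r(x):
    """psi + psi*: real by construction."""
    return psi(x) + psi(x).conjugate()


def psi_i(x):
    """i (psi - psi*): real by construction."""
    return 1j * (psi(x) - psi(x).conjugate())


def tise_residual(f, x, h=1e-4):
    """|-(1/2) f'' + V f - E f| at x, using V = 0 and a central-difference f''."""
    second = (f(x + h) - 2 * f(x) + f(x - h)) / h**2
    return abs(-0.5 * second - ENERGY * f(x))


for x in (0.0, 0.4, 1.1):
    rebuilt = (psi_r(x) - 1j * psi_i(x)) / 2
    print(x, psi_r(x).real, psi_i(x).real, abs(rebuilt - psi(x)),
          tise_residual(psi_r, x), tise_residual(psi_i, x))
```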

If $V(x)$ is an even function, then $\psi(x)$ can always be taken to be either even or odd.

Since $V$ is even we know that $V(-x) = V(x)$. Now we need to prove that if $\psi(x)$ is a solution to the time-independent Schroedinger equation, then $\psi(-x)$ is also a solution.

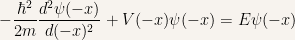

The time-independent Schroedinger equation holds at every point, so we can change from $x$ to $-x$ in it:

$$-\frac{\hbar^2}{2m}\frac{d^2\psi(-x)}{d(-x)^2} + V(-x)\psi(-x) = E\psi(-x)$$

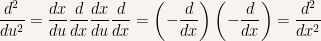

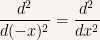

In order to understand what’s going on with the previous equation we need to express $\dfrac{d^2\psi(-x)}{d(-x)^2}$ in terms of derivatives with respect to $x$.

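Let us introduce the variable $y$ and define it as $y = -x$. Then

$$\frac{d\psi(-x)}{dx} = \frac{d\psi(y)}{dy}\frac{dy}{dx} = -\frac{d\psi(y)}{dy}$$

And for the second derivative we have

$$\frac{d^2\psi(-x)}{dx^2} = \frac{d}{dx}\left(-\frac{d\psi(y)}{dy}\right) = -\frac{d^2\psi(y)}{dy^2}\frac{dy}{dx} = \frac{d^2\psi(y)}{dy^2}$$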

In the last expression $y$ is a dummy variable and thus can be substituted by any other symbol (also see this post Trick with partial derivatives in Statistical Physics to see what kind of manipulations you can do with change of variables and derivatives). For convenience we’ll change it back to $-x$, and we get

$$\frac{d^2\psi(-x)}{dx^2} = \frac{d^2\psi(-x)}{d(-x)^2}$$

So our initial expression becomes

$$-\frac{\hbar^2}{2m}\frac{d^2\psi(-x)}{dx^2} + V(-x)\psi(-x) = E\psi(-x)$$

Using the fact that $V$ is even, we get

$$-\frac{\hbar^2}{2m}\frac{d^2\psi(-x)}{dx^2} + V(x)\psi(-x) = E\psi(-x)$$

Hence $\psi(-x)$ is also a solution to the time-independent Schroedinger equation, with the same energy $E$.

Since $\psi(x)$ and $\psi(-x)$ are both solutions to the time-independent Schroedinger equation whenever $V$ is even, we can construct even and odd functions that are solutions to the time-independent Schroedinger equation.

The even functions are constructed as

$$\psi_+(x) = \psi(x) + \psi(-x)$$

and the odd functions are constructed as

$$\psi_-(x) = \psi(x) - \psi(-x)$$

Since

$$\psi(x) = \frac{1}{2}\left[\psi_+(x) + \psi_-(x)\right]$$

we have shown that, when the potential is an even function, any solution to the time-independent Schroedinger equation can be expressed as a linear combination of even and odd solutions.
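The even/odd construction can be tried out numerically. This sketch assumes the even potential $V(x) = -\operatorname{sech}^2 x$ in units with $\hbar = m = 1$, purely as an example not taken from the post. For this potential, $\psi(x) = (\tanh x - ik)e^{ikx}$ solves the time-independent Schroedinger equation with $E = k^2/2$, and it is neither even nor odd. The code forms $\psi(x) \pm \psi(-x)$ and checks with a finite-difference residual that both combinations are still solutions with the expected parity.

```python
import cmath
import math

# Assumptions for illustration (not from the post): hbar = m = 1 and the even potential
# V(x) = -sech^2(x). For it, psi(x) = (tanh x - i K) exp(i K x) solves
# -(1/2) psi'' + V psi = E psi with E = K^2 / 2, and psi is neither even nor odd.
K = 1.5
ENERGY = K**2 / 2


def v(x):
    """Even example potential V(x) = -sech^2(x)."""
    return -1.0 / math.cosh(x) ** 2


def psi(x):
    return (math.tanh(x) - 1j * K) * cmath.exp(1j * K * x)


def psi_even(x):
    """psi(x) + psi(-x): even by construction."""
    return psi(x) + psi(-x)


def psi_odd(x):
    """psi(x) - psi(-x): odd by construction."""
    return psi(x) - psi(-x)


def tise_residual(f, x, h=1e-4):
    """|-(1/2) f'' + V f - E f| at x, using a central-difference second derivative."""
    second = (f(x + h) - 2 * f(x) + f(x - h)) / h**2
    return abs(-0.5 * second + v(x) * f(x) - ENERGY * f(x))


for x in (0.3, 1.0, 2.5):
    print(f"x = {x}: residuals psi {tise_residual(psi, x):.1e}, "
          f"even {tise_residual(psi_even, x):.1e}, odd {tise_residual(psi_odd, x):.1e}")
```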

Show that $E$ must exceed the minimum value of $V(x)$ for every normalizable solution to the time-independent Schroedinger equation.

Solving the time-independent Schroedinger equation for its second $x$ derivative:

$$\frac{d^2\psi}{dx^2} = \frac{2m}{\hbar^2}\left[V(x) - E\right]\psi$$

Let us proceed with a proof by contradiction and assume that we have  . Using the previous equation this implies that

. Using the previous equation this implies that  and

and  have the same sign. This comes from the fact that

have the same sign. This comes from the fact that  is positive.

is positive.

Let us suppose that  is always positive. Then

is always positive. Then  is also always positive. Hence

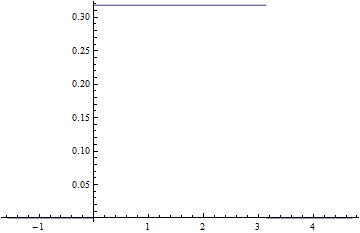

is also always positive. Hence  is concave up. In the first quadrant the graph of the function is shaped like

is concave up. In the first quadrant the graph of the function is shaped like

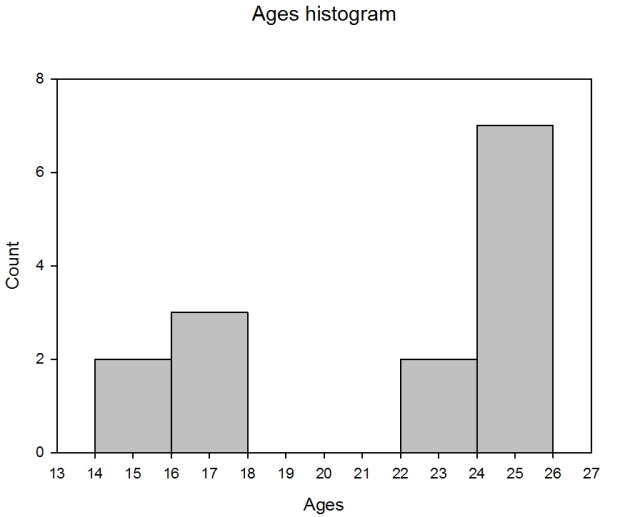

Since by hypothesis our function is normalizable, it needs to go to $0$ as $x \to +\infty$. In order for the function to go to $0$, the plot needs to turn over and bend back down towards the $x$ axis.

Such a behavior would imply that there is a region of space where the function is positive and its second derivative is negative (the region where the curve bends back down).

Such a behavior is in direct contradiction with the conclusion that $\psi$ and $\dfrac{d^2\psi}{dx^2}$ always have the same sign. (If $\psi$ is always negative, the same argument applies with all the signs reversed.) Since this contradiction arose from the hypothesis that $E < V_{\text{min}}$, the logical conclusion is that $E > V_{\text{min}}$.

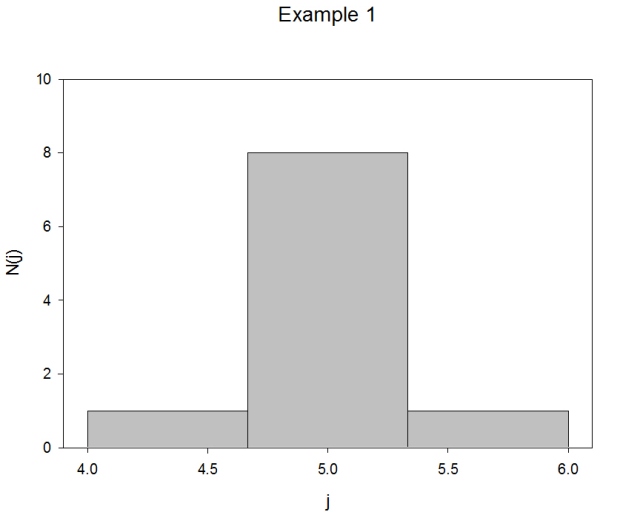

With $E > V_{\text{min}}$, $\psi$ and $\dfrac{d^2\psi}{dx^2}$ no longer need to have the same sign at all times: in regions where $V(x) < E$ they have opposite signs. Hence $\psi$ can turn over towards the $x$ axis and go to $0$ as $x \to \pm\infty$.
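The argument can also be illustrated by integrating the time-independent Schroedinger equation numerically, written as $\psi'' = \frac{2m}{\hbar^2}(V - E)\psi$. The sketch below makes the following illustrative choices:

- units with $\hbar = m = 1$;
- a harmonic potential $V(x) = x^2/2$, so $V_{\text{min}} = 0$;
- even starting values $\psi(0) = 1$, $\psi'(0) = 0$;
- a classic fourth-order Runge-Kutta step.

For $E = -0.5 < V_{\text{min}}$ the function only curves upwards and keeps growing. For $E = 0.5$ it bends back towards the axis and follows the decaying ground state $e^{-x^2/2}$.

```python
import math

# Assumptions for illustration (not from the post): hbar = m = 1 and the harmonic potential
# V(x) = x^2 / 2, whose minimum value is V_min = 0. We start from the even initial data
# psi(0) = 1, psi'(0) = 0 and integrate psi'' = 2 (V - E) psi with the classic RK4 method.


def v(x):
    return 0.5 * x * x


def integrate_tise(energy, x_max=3.0, steps=3000):
    """Return grid points and psi values from x = 0 to x = x_max."""
    h = x_max / steps

    def deriv(x, psi, dpsi):
        # First-order system: (psi, psi') -> (psi', psi'').
        return dpsi, 2.0 * (v(x) - energy) * psi

    x, psi, dpsi = 0.0, 1.0, 0.0
    xs, psis = [x], [psi]
    for _ in range(steps):
        k1 = deriv(x, psi, dpsi)
        k2 = deriv(x + h / 2, psi + h / 2 * k1[0], dpsi + h / 2 * k1[1])
        k3 = deriv(x + h / 2, psi + h / 2 * k2[0], dpsi + h / 2 * k2[1])
        k4 = deriv(x + h, psi + h * k3[0], dpsi + h * k3[1])
        psi += h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        dpsi += h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        x += h
        xs.append(x)
        psis.append(psi)
    return xs, psis


for energy in (-0.5, 0.5):
    _, psis = integrate_tise(energy)
    print(f"E = {energy:+.1f}: psi(3) = {psis[-1]:.6f}")
print(f"exact ground state psi(3) = exp(-4.5) = {math.exp(-4.5):.6f}")
```

Integrating much further out with $E = 0.5$ would eventually show numerical blow-up, because any tiny error excites the growing solution. The range $x \le 3$ is kept short for that reason.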